How to think about LLMs*

(*Most marketing materials just call it AI. LLMs are in fact only one branch of AI.)

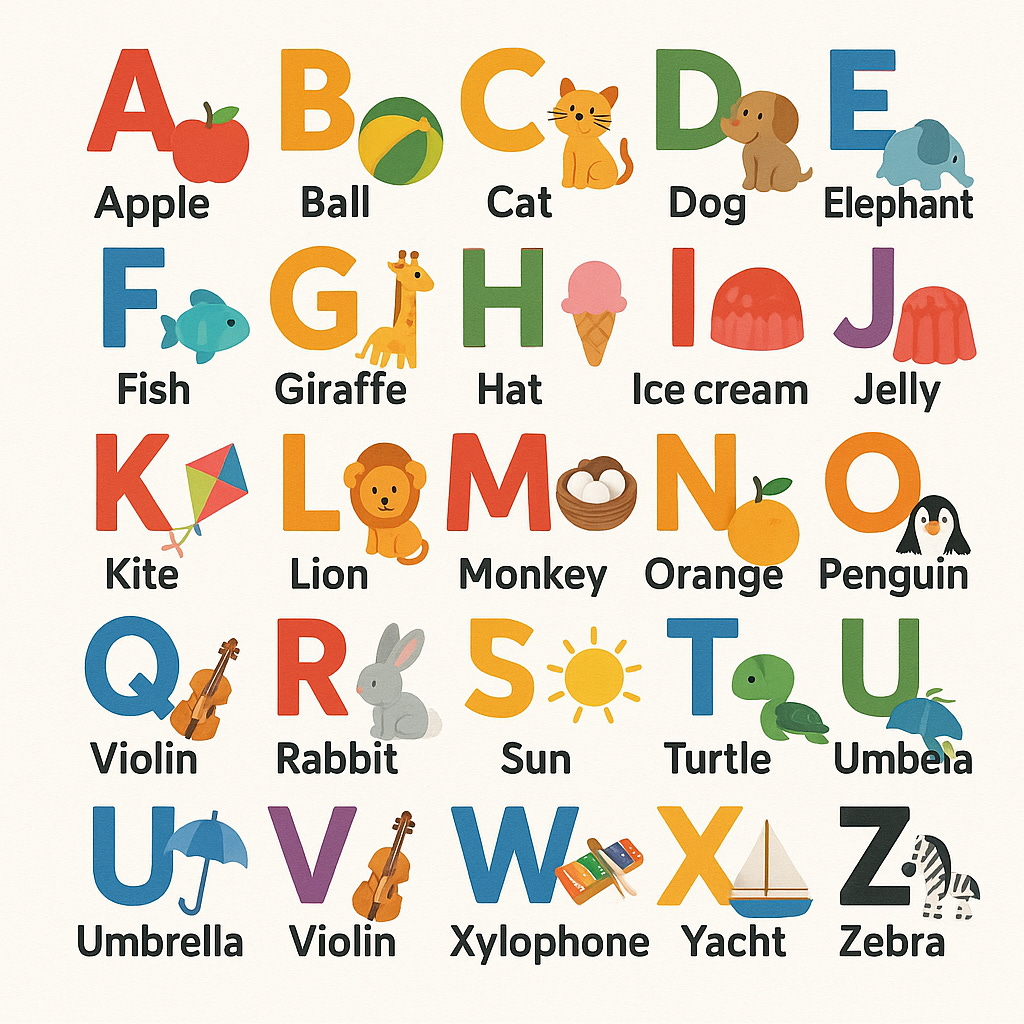

The picture above was generated by ChatGPT o3 to show each letter of the alphabet paired with a word starting with that letter and an image of that word.

We can clearly see some mistakes, for example:

- Orange doesn't start with the letter N

- The images for the Hat and the Ice cream are wrong

The exercise is extremely easy for humans but seems tricky for LLMs.

It would be easy to draw a pessimistic conclusion about LLMs from this, but that would not be useful. I am referring to the latest wave of criticism of LLMs that followed Apple's well-known paper, "The Illusion of Thinking".

LLMs are not human brains.

It's not pertinent to compare LLMs' reasoning with humans' thinking.

They are tools. We need to know what they are good at and benefit from it, and we need to know what they are not good at and be mindful about it.

The bottom line: LLMs hold a compressed version of the internet in their parameters (think of it as their knowledge). This means that for subjects with good content on the internet, we can usually get good answers from LLMs as well (for example, enterprise content).

LLMs are then fine-tuned on human preferences so that they behave like helpful assistants. The latest models are further trained with a technique called reinforcement learning to be really good at math and coding.

Finally, reasoning models such as ChatGPT o3 and DeepSeek R1 can spend more computation on a single question by reasoning through it before they answer. They take more time to answer, but for problem-solving tasks the results are better. LLMs can also use tools such as internet search and running code, which makes them very capable at tasks like research and data analytics.

In short, setting aside the hype, they are great tools for information search, summarisation, calculation, coding, writing, and ideation. There is a very good chance of getting good results on subjects where there is great content on the internet, or on subjects like business, math, and coding (which they have been further trained on).

Lastly, LLMs are still fundamentally probabilistic tools. Always keep an eye on the results. Call an expert to check if needed.
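To make "probabilistic" a bit more concrete, here is a toy sketch in Python. It is not how any real model is implemented: the function `sample_next_token`, the `temperature` parameter as used here, and the scores in `toy_logits` are all made up for illustration. The idea is only that the model assigns a score to each candidate word and then draws one at random in proportion to those scores, so a less likely (and wrong) word still comes out some of the time.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, rng=random):
    """Draw one token at random from a softmax over hypothetical scores."""
    # Dividing by the temperature sharpens (<1) or flattens (>1) the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    # Subtract the maximum before exponentiating for numerical stability.
    max_score = max(scaled.values())
    weights = {tok: math.exp(s - max_score) for tok, s in scaled.items()}
    total = sum(weights.values())
    tokens = list(weights)
    probs = [weights[t] / total for t in tokens]
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up scores for the word that follows "N is for ..." (illustration only).
toy_logits = {"Nest": 2.0, "Nut": 1.5, "Orange": 0.5}

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_token(toy_logits, rng=rng) for _ in range(1000)]
counts = {tok: samples.count(tok) for tok in toy_logits}
print(counts)
```

In this toy setup "Orange" has the lowest score, yet it still shows up in a share of the 1,000 draws. That is the practical reason to keep checking the results.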